There’s growing curiosity about AI voice agents and their ability to comprehend human emotions. You may wonder whether these technologies can truly understand your sentiments and respond empathetically. In this blog post, we explore the current capabilities of AI voice agents, the significance of emotional intelligence in human interactions, and whether these systems can genuinely connect with you on a deeper level. Join us as we delve into the intersection of technology and emotional understanding.

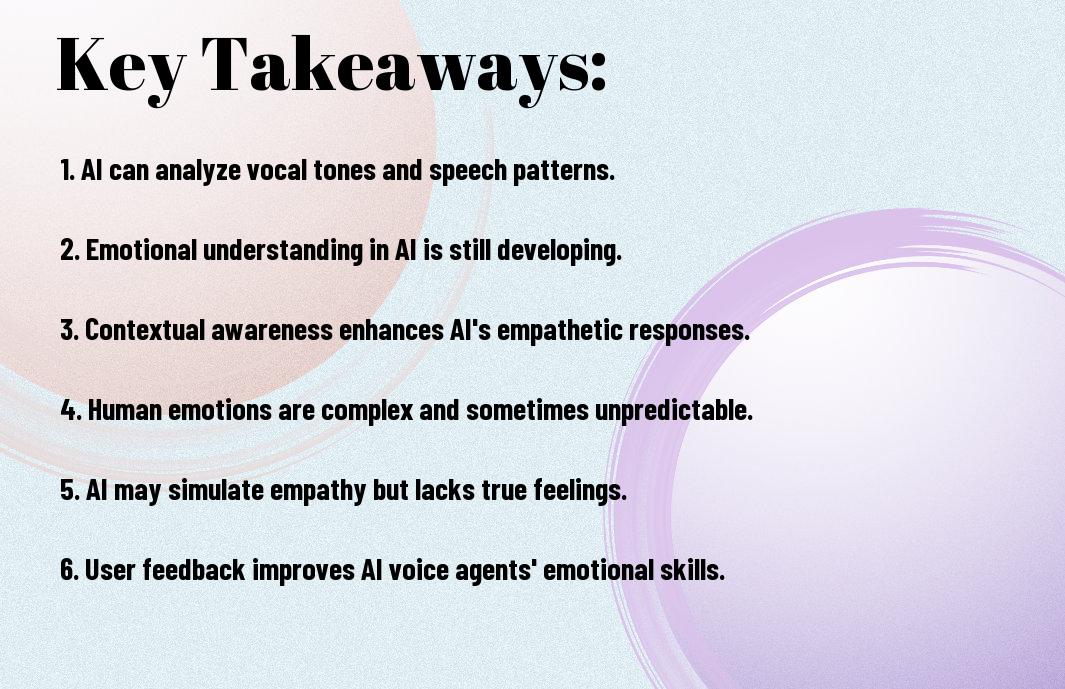

Key Takeaways:

- Emotional Detection: AI voice agents are designed to recognize and respond to human emotions through voice tone, pitch, and speech patterns.

- Limitations: Despite advancements, current AI systems struggle with contextual understanding and nuanced emotional expressions.

- Human-Like Interaction: Improving emotional intelligence in AI can enhance user experience by making interactions feel more natural and empathetic.

- Ethical Considerations: The deployment of emotionally intelligent AI raises ethical concerns regarding manipulation and privacy in user interactions.

- Future Potential: Ongoing research aims to improve AI’s emotional comprehension, potentially leading to more advanced and intuitive voice agents.

Understanding Emotional Intelligence

As you navigate the complex world of human interactions, emotional intelligence plays a pivotal role in understanding not just your own emotions but those of others as well. This multifaceted skill set encompasses the ability to recognize, evaluate, and respond to emotions in a meaningful way, impacting your relationships and decision-making processes.

Definition of Emotional Intelligence

In the early 1990s, psychologists popularized the term “emotional intelligence,” defining it as the capacity to recognize, understand, and manage your own emotions while also being attuned to the emotions of others. This set of skills is essential for effective communication and relationship-building, allowing you to navigate emotional landscapes with greater ease.

Components of Emotional Intelligence

Emotional intelligence comprises several key components: self-awareness, self-regulation, motivation, empathy, and social skills. Each of these elements contributes to your overall ability to handle emotions positively and effectively.

This framework of emotional intelligence allows you to enhance your interpersonal interactions significantly. Self-awareness enables you to identify your emotional states, while self-regulation helps you control impulsive feelings and behaviors. Motivation drives your perseverance towards goals, and empathy allows you to connect with others on a deeper level. Finally, strong social skills empower you to manage relationships and communicate effectively, facilitating a more harmonious environment in both personal and professional realms.

The Role of AI in Voice Technology

Some of the most advanced voice technologies today rely heavily on AI to enhance user interaction and responsiveness. By integrating deep learning and natural language processing, AI voice agents are making conversations more natural and intuitive. For an insightful overview, check out Emotion AI, explained. This emerging field aims to equip AI with better emotional understanding, allowing for richer and more empathetic interactions.

Voice Agents and Their Capabilities

Along with their ability to recognize speech, voice agents can now analyze sentiment and context, making them increasingly reliable for everyday tasks. They offer personalized responses based on your preferences, which enhances the overall user experience. As these technologies progress, you may find that your interactions feel more human-like.

The Evolution of AI Voice Recognition

About a decade ago, AI voice recognition was largely restricted to basic command execution, with little understanding of context or emotion. Today, advancements allow systems to process natural language more effectively, paving the way for sophisticated applications. This progress offers you a more seamless way to engage with technology.

AI voice recognition has moved from simple command-response systems to conversational agents that can parse complex sentences and pick up on some emotional cues. With the implementation of machine learning algorithms, these agents continuously improve their understanding of your speech patterns and preferences, leading to a more personalized experience. As you interact with these technologies, you may find they become better attuned to your voice and emotions, enhancing communication and usability.

Emotional Analysis in AI Voice Agents

To effectively leverage AI voice agents, understanding their emotional analysis capabilities is important. While these agents employ sophisticated algorithms to interpret users’ emotions, there are inherent challenges in accurately capturing human feelings. You can explore more about the complexity of this process in The Risks of Using AI to Interpret Human Emotions.

Techniques for Emotion Detection

Analysis of user interaction data plays a significant role in emotion detection, as AI voice agents utilize natural language processing (NLP), tone analysis, and contextual understanding to identify emotional cues. By analyzing speech patterns and word choices, they aim to gauge your mood and intention, enhancing their interaction quality.
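To make the idea concrete, here is a minimal sketch of how word choice and vocal cues might be combined into a single mood guess. It is a toy illustration, not how any particular voice agent works: the cue-word lists, the `Utterance` fields, the thresholds, and the mood labels are all assumptions made up for this example, and the pitch and speaking-rate values are assumed to arrive from a separate audio-processing step.

```python
from dataclasses import dataclass

# Hypothetical, hand-picked cue words; real systems learn such cues from data.
NEGATIVE_WORDS = {"angry", "upset", "terrible", "frustrated", "sad"}
POSITIVE_WORDS = {"great", "thanks", "happy", "love", "perfect"}


@dataclass
class Utterance:
    text: str                 # transcript of what was said
    mean_pitch_hz: float      # average pitch (assumed precomputed upstream)
    words_per_second: float   # speaking rate (assumed precomputed upstream)


def lexical_score(text: str) -> int:
    """Count positive cue words minus negative cue words in the transcript."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    positives = sum(w in POSITIVE_WORDS for w in words)
    negatives = sum(w in NEGATIVE_WORDS for w in words)
    return positives - negatives


def guess_mood(u: Utterance, baseline_pitch_hz: float = 180.0) -> str:
    """Combine word choice with a crude arousal cue (raised pitch or fast speech)."""
    score = lexical_score(u.text)
    # Assumed thresholds: pitch 20% above baseline, or more than 3.5 words/second.
    aroused = u.mean_pitch_hz > baseline_pitch_hz * 1.2 or u.words_per_second > 3.5
    if score < 0:
        return "frustrated" if aroused else "sad"
    if score > 0:
        return "excited" if aroused else "content"
    return "neutral"


print(guess_mood(Utterance("This is terrible, I am so upset!", 240.0, 4.0)))
print(guess_mood(Utterance("Thanks, that works.", 170.0, 2.5)))
```

Even in this simplified form, the guess depends on two separate signals: what was said and how it was said. Production systems typically replace the hand-written rules with trained models, but the inputs are similar in kind.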

Challenges in Emotion Recognition

After years of development, AI still struggles to recognize emotions accurately because of factors such as cultural differences and nuanced human expressions. Speech distorted by environmental factors or personal circumstances adds further complexity to emotion detection.

Understanding the hurdles in emotion recognition is vital as these challenges can lead to misinterpretations that affect user experience. AI may misread sarcasm, humor, or frustration, resulting in responses that feel disconnected or inappropriate. Furthermore, emotions are inherently complex and can vary from one individual to another, making it difficult for a standardized model to gauge emotional intent effectively. You should be aware that these limitations can impact the reliability of interactions with AI voice agents.
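The sarcasm problem is easy to reproduce with a deliberately naive approach. The sketch below labels a sentence by counting cue words; the word lists and the `naive_sentiment` function are hypothetical and exist only to show the failure mode, not to represent any real product.

```python
# Hypothetical cue-word lists for illustration only.
UPBEAT_WORDS = {"great", "love", "perfect", "wonderful"}
DOWNBEAT_WORDS = {"awful", "hate", "terrible", "annoying"}


def naive_sentiment(text: str) -> str:
    """Label text by counting cue words, ignoring tone and context entirely."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    balance = sum(w in UPBEAT_WORDS for w in words) - sum(w in DOWNBEAT_WORDS for w in words)
    if balance > 0:
        return "positive"
    if balance < 0:
        return "negative"
    return "neutral"


# A sarcastic complaint: a human hears frustration, the word counter does not.
print(naive_sentiment("Oh great, another dropped call. Just perfect."))
```

The sentence is labeled positive because "great" and "perfect" are counted at face value. Detecting the frustration would require tone of voice or conversational context, which is exactly where current systems tend to fall short.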

AI Voice Agents in Real-World Applications

AI voice agents are making significant strides across various industries, transforming how we interact with technology. Whether used in customer service, mental health support, or personal assistance, these agents aim to provide a more seamless and human-like experience. Their ability to pick up on context and emotions is instrumental in enhancing user engagement and satisfaction, and they are becoming an integral part of daily life.

Customer Service and Support

AI voice agents are changing how customer service and support work. These agents handle inquiries and provide solutions rapidly, allowing you to receive assistance at any time of day without the frustration of long wait times. Their ability to analyze customer sentiment helps them respond appropriately and offer a more personalized experience.

Mental Health and Wellbeing

Besides customer service applications, AI voice agents are also emerging as valuable tools in the mental health and wellbeing sector. They can provide immediate support when you need someone to talk to, offering resources and helping you manage your emotional states more effectively.

At the forefront of mental health support, AI voice agents can engage you in conversations that encourage self-reflection and emotional processing. Some applications allow you to track your mood over time, offering personalized feedback and coping strategies. This technology can serve as a supplementary tool for mental health professionals, making support more accessible while enabling you to express your feelings and thoughts in a non-judgmental space.
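Mood tracking of this kind can be sketched in a few lines. The example below assumes a simple design in which you rate your mood from 1 (low) to 5 (high) and the app looks at a rolling window of recent entries; the `MoodTracker` class, the window size, the 2.5 cutoff, and the feedback messages are illustrative assumptions, not a description of any specific application.

```python
from collections import deque
from statistics import mean
from typing import Optional


class MoodTracker:
    """Keeps the most recent self-reported mood ratings (1 = low, 5 = high)."""

    def __init__(self, window: int = 7):
        # A bounded deque silently drops the oldest rating once the window is full.
        self.ratings = deque(maxlen=window)

    def log(self, rating: int) -> None:
        if not 1 <= rating <= 5:
            raise ValueError("rating must be between 1 and 5")
        self.ratings.append(rating)

    def average(self) -> Optional[float]:
        """Average of the ratings in the window, or None if nothing is logged."""
        return mean(self.ratings) if self.ratings else None

    def feedback(self) -> str:
        avg = self.average()
        if avg is None:
            return "No entries yet."
        if avg < 2.5:  # assumed cutoff for a "low" stretch
            return "Your recent mood has been low; consider reaching out to someone you trust."
        return "Your recent mood looks steady."


tracker = MoodTracker(window=3)
for rating in [5, 2, 2, 1]:
    tracker.log(rating)
print(tracker.feedback())
```

Because the window holds only the three latest ratings here, the early 5 no longer counts and the feedback reflects the recent dip. A real tool would pair this kind of signal with guidance from mental health professionals rather than rely on a fixed threshold.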

User Perceptions of AI Emotional Understanding

Despite the advancements in AI technology, user perceptions of AI’s emotional understanding vary significantly. Many users exhibit a mix of skepticism and optimism, questioning whether AI can truly grasp the nuances of human emotions. This complexity is influenced by personal experiences with AI and societal attitudes towards technology. You may find that your trust in AI’s emotional capabilities often hinges on its performance and reliability in real-world interactions, leading to a diverse range of expectations from different individuals.

Trust and Acceptance of AI Agents

Your trust in and acceptance of AI agents are essential for their effective integration into daily life. Trust can develop through consistent positive experiences, but any perceived shortcomings in emotional understanding may lead to hesitancy. You might find that your willingness to use AI for emotional support is influenced by how well it resonates with your feelings and needs. Acceptance grows when AI shows reliability and empathy, thereby creating a more comforting interaction.

Cases of Misinterpretation

One significant challenge in the perception of AI emotional understanding is the frequency of misinterpretation. Despite AI’s ability to analyze patterns, it can still miss the nuances of human emotion, leading to misguided responses.

User experiences with AI often reveal instances where emotional cues are misunderstood, resulting in responses that feel out of touch or inappropriate. These misinterpretations can stem from the limitations of AI’s algorithms and its reliance on contextual data that may not fully capture your emotional state. For example, a user expressing sadness might receive a generic motivational response rather than the empathetic acknowledgment they seek. This gap can hinder effective communication and create frustration, ultimately impacting your overall trust in AI emotional intelligence.

Ethical Implications of AI Emotional Intelligence

After considering the advances in AI emotional intelligence, one must also reflect on the ethical implications that arise. These systems, designed to mimic human empathy, may lead us to question the reliability of their responses. For a deeper look at this topic, check out Do We Need Emotionally Intelligent AI?

Privacy and Data Security Concerns

Emotional AI relies heavily on analyzing user data to gauge feelings and reactions, raising significant privacy concerns. You may be wondering what happens to your sensitive data and how it is protected. Without proper measures in place, your emotions could be stored, analyzed, and potentially misused, compromising your privacy and security.

Potential for Manipulation

The increasing sophistication of AI emotional intelligence opens doors to manipulation risks. If these systems can accurately gauge your emotions, they may be able to influence your decisions or behaviors, ultimately eroding your autonomy. Picture receiving personalized ads that exploit your emotional vulnerabilities—this not only raises ethical questions but also poses risks to your well-being.

Privacy concerns intertwine with the potential for manipulation. If emotionally intelligent AI systems access your personal data, you could be influenced in ways you might not even realize, making it imperative to establish clear guidelines and protections. By understanding how your emotions can be exploited, you can be more proactive in safeguarding your interests in an increasingly digital world.

Final Words

Following this exploration of AI voice agents and their emotional intelligence, you can appreciate that while these technologies excel in recognizing patterns and responding to cues, their understanding of human emotions remains limited. As you engage with these systems, it’s vital to approach them as tools rather than replacements for genuine human interaction. By leveraging AI thoughtfully, you can enhance your experiences while remaining aware of its boundaries in emotional comprehension.

FAQ

Q: What are AI voice agents and how do they work in terms of emotional intelligence?

A: AI voice agents are software systems designed to interact with users through spoken language, utilizing natural language processing (NLP) to understand and generate human-like responses. They work by analyzing the tone, pitch, and speech patterns of users to gauge emotional states, enabling them to respond more appropriately. These agents use sentiment analysis algorithms to interpret the underlying emotions based on verbal cues, making it possible for them to engage in conversations that feel more personal and empathetic.

Q: Can AI voice agents genuinely understand human emotions or merely simulate empathy?

A: While AI voice agents can identify and respond to emotional cues, their understanding is fundamentally different from human empathy. These systems simulate empathetic responses based on patterns and data from past interactions, allowing them to create a semblance of emotional understanding. However, they lack consciousness and personal experiences that characterize human emotion. Therefore, while they can appear empathetic in their interactions, this is a result of programmed responses rather than true emotional comprehension.

Q: What are the limitations of AI voice agents concerning emotional insights?

A: The limitations of AI voice agents include their reliance on data, which means they can misinterpret context or nuances in human emotion. They may struggle with sarcasm, cultural differences, or complex emotional expressions that require a deeper understanding. Additionally, their ability to handle obscure or unforeseen situations is limited, as they can only operate within their programmed frameworks. As a result, while they can enhance user experiences, their capability to fully grasp the complexities of human emotions is restricted.